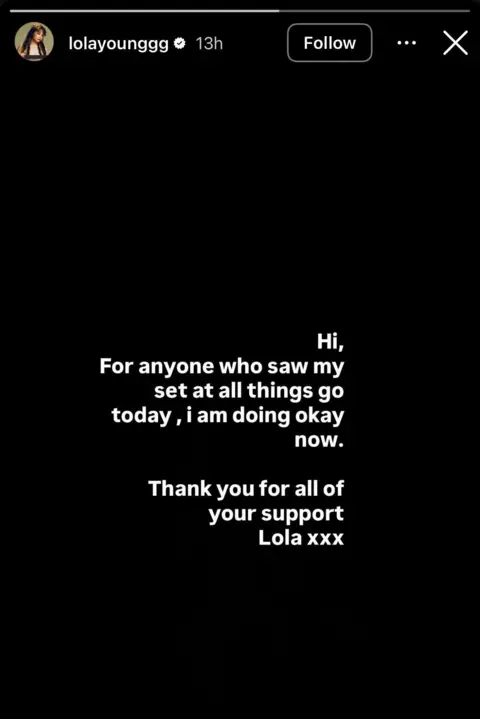

Instagram's tools designed to protect teenagers from harmful content are failing to stop them from seeing suicide and self-harm posts, a study has claimed.

Researchers also said the social media platform, owned by Meta, encouraged children to post content that received highly sexualised comments from adults.

The testing, by child safety groups and cyber researchers, found 30 out of 47 safety tools for teens on Instagram were substantially ineffective or no longer existed.

Meta has disputed the research and its findings, saying its protections have led to teens seeing less harmful content on Instagram.

"This report repeatedly misrepresents our efforts to empower parents and protect teens, misstating how our safety tools work and how millions of parents and teens are using them today," a Meta spokesperson told the BBC.

"Teen Accounts lead the industry because they provide automatic safety protections and straightforward parental controls."

The company introduced teen accounts to Instagram in 2024, saying it would add better protections for young people and allow more parental oversight.

The study into the effectiveness of its teen safety measures was carried out by the US research centre Cybersecurity for Democracy and experts including whistleblower Arturo Béjar, on behalf of child safety groups including the Molly Rose Foundation.

The researchers set up fake teen accounts and found significant issues with the tools.

They reported that only eight of the 47 safety tools analysed were working effectively, meaning teens were being shown content that breached Instagram's own guidelines about what should be shown to young users.

This included posts describing demeaning sexual acts, as well as autocomplete suggestions for search terms promoting suicide, self-harm or eating disorders.

"These failings point to a corporate culture at Meta that puts engagement and profit before safety," said Andy Burrows, chief executive of the Molly Rose Foundation.

At a 2022 inquest into the death of Molly Russell, who took her own life at 14, the coroner concluded she died while suffering from depression and the negative effects of online content.

The researchers' findings included evidence of children under the age of 13 posting videos and engaging in risky behaviours driven by Instagram's algorithms.

Mr Burrows criticised Meta's teen accounts as a superficial public relations stunt rather than a thorough and genuine attempt to resolve longstanding safety concerns on Instagram.

In response to the backlash, Meta said the research misinterpreted its content settings for teens and reaffirmed its commitment to improving safety measures on the platform.